The two friends of a distributed systems engineer: timeouts and retries

As a software engineer, I find distributed systems very interesting. After working with them for years, I still find them intriguing. It’s amazing to see different autonomous systems coordinate to achieve a goal like higher reliability.

But as I wrote in my previous blog post, distributed systems can also be a source of problems and headaches. There I wrote about why you should think twice before building a distributed system.

This time I want to talk about the two cornerstones of all distributed systems: timeouts and retries.

Timeouts

When you make a call across the network to another system, you might never get a reply to that call or the reply might be delayed. The target system can be down, overloaded, the network is partitioned, a switch is dropping packets, a tornado destroyed a datacenter — or all of these combined. So it's useful to set an upper limit on how long you want to wait for that response before giving up.

What happens when there isn’t a timeout?

Let’s look at how the lack of timeouts affects a distributed system and its clients.

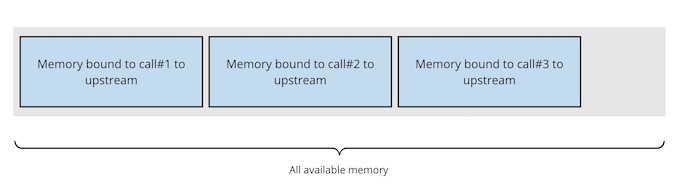

For each call to an upstream component, some of the caller’s resources are bound to that call for its duration (e.g., CPU, memory, file descriptors, pool resources, etc.). We want these resources freed as fast as possible to avoid running out of them.

When these resources are not freed, things can rapidly get out of control. Imagine we have a component that receives 100 call/s, and for each, it makes another call to an upstream component without setting a timeout. Each request in the caller uses around 200KB of memory and every call hangs because the upstream has crashed. In one minute you would have accumulated ~1GB of memory to in-flight requests (1 minute * 60 seconds * 100 req/second * 200 kb/request). In this situation, your service could crash because it ran out of memory.

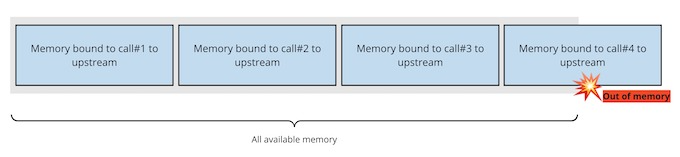

From the point of view of the customer, things won't look good either. If the client is making calls sequentially (i.e., waiting for the current call to finish before making the next one), not having a timeout could impact its throughput significantly. One hanging request would block all further work a client would want to do.

Timeouts are important because with them the components would release the memory and other resources early, lowering the chances of running out of any of them and also shortening the request/response cycle for our clients.

Things to keep in mind when using timeouts

Timeouts are quite a straightforward concept but there are nonetheless a few things that you have to keep in mind when using them.

Always set a timeout

It's easy to forget to set a timeout. Unless explicitly tested, you won't catch this on your unit or integration tests and everything will work fine in production until it doesn't.

At Contentful, we wrapped an open-source Node HTTP client with our own logic to, among other things, force each HTTP call to include an explicit timeout. The wrapper is written in TypeScript and the typing system will complain if the client is used without a timeout and throw an error if called.

Picking a good timeout value

When picking a timeout value you have to consider product, usability, and UX factors. For example: Does it make sense to keep a user waiting for a call that will timeout in 10 seconds or would it be better to timeout earlier? You also shouldn't pick a timeout value that's too low, which would cause frequent failures, but not too high to keep too many resources bound to a request that will eventually fail.

A rule of thumb to pick timeout values is to look at the P99 response times or the SLO of the service you are calling and add some buffer on top of it as your timeout value.

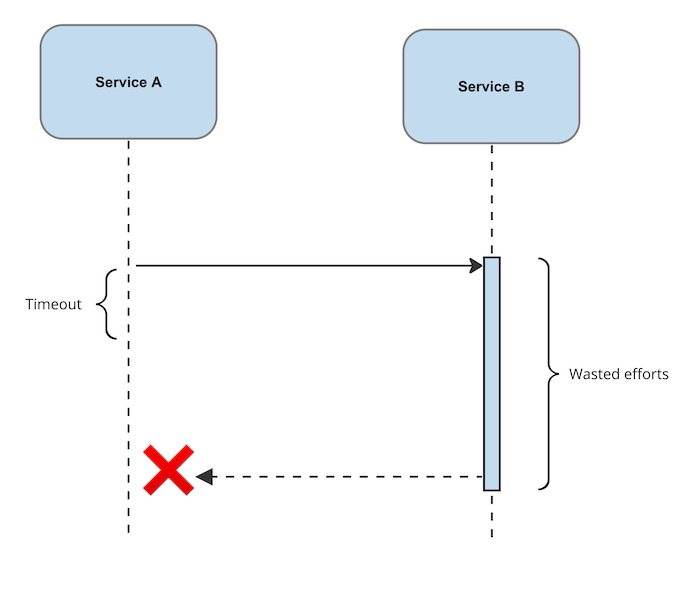

Abandoned requests

When a request times out, the caller service will abort the request, free up the resources and move on. Unless handled explicitly, the callee might continue executing the request until it completes it and tries to return the response to a caller which is long gone. If the callee is already overloaded, spending resources on requests that won’t be used is wasteful.

We cover in the following section a possible mitigation to this problem.

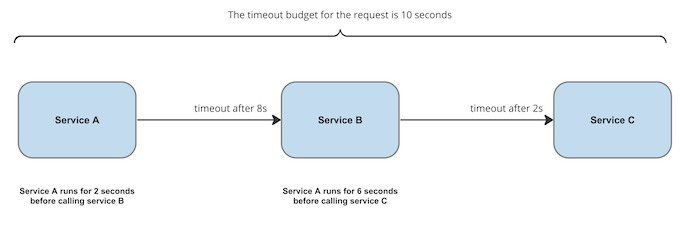

Request timeout budgets

Let’s say that an operation has a timeout of five seconds. Following what we said above about abandoned requests, any work related to that request that hasn’t finished in those five seconds would be wasted work.

One way to solve this is to think about the timeout as the budget the request has as it traverses through our system. Each component must keep track of how much time it has spent processing a request before making a call to an upstream system and include the remaining time in the call (e.g. in the HTTP headers). The upstream takes the timeout budget and repeats the process, aborting if it runs out of time.

With timeout budgets, we can abort in-flight requests deep in the system and reduce the amount of wasted resources.

Retries

When calls to an upstream fail (either because of a timeout or some other reason) it's common practice to retry these calls. With retries, we can handle transient errors and improve the customer’s experience.

Improved customer experience

With a timeout, calls will fail instead of hanging potentially indefinitely, which is good because we can get back the resources bound to the request. But on the flip side, these errors will bubble up to the customer even when the problem was transient: maybe the database of an upstream service was overloaded and it took longer to service your request.

To hide these temporary glitches, you could use retries. With retries you would execute the call a few more times with the expectation that one of them will succeed. Note that retries apply to timeout errors as well other types of transient errors.

Traffic spikes

Another reason for using retries is to be able to handle spikes in traffic. If your system receives a spike in traffic and some of the calls to its upstreams fail, you can “send the failed calls to the future” with the expectation that in the future, the spike is over and the system can process the requests without failing. Note that if the increase in load is sustained in time, then retries won't help your system handle it. You would be sending requests to a future where the system continues to be overloaded. It's also possible that in cases like these, retries aggravate the overload of the system.

Things to keep in mind when using retries

If your systems use timeouts and retries then you will be okay in most situations. But there are several possible downsides that you should be aware of. Following is a list of some of the things that we keep in mind when designing systems where retries are used.

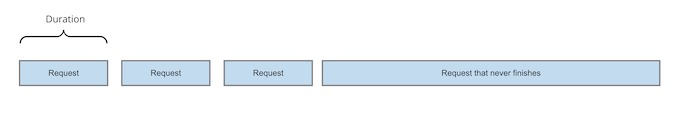

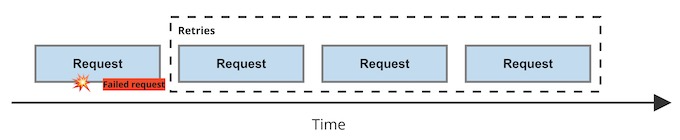

Drop in throughput

Whenever requests are retried, you are delaying the completion of a piece of work. This has a direct impact on how much work per unit of time is your system capable of doing. Too many timeouts and retries would affect the throughput.

In the image above the first request fails and it’s retried up to three times, delaying the response and reducing the throughput of the systems.

SLA violations

If you have an SLA (service level agreement) with your clients, then you should take the value of that SLA into account when setting the timeout and the number of retries. Consider, for example, an operation which has an SLA of one second for a successful response. If your service has a timeout of 500ms and it retries up to three times, then it could easily go over the SLA value if it retries more than once (i.e., response time can be > 1.5 seconds).

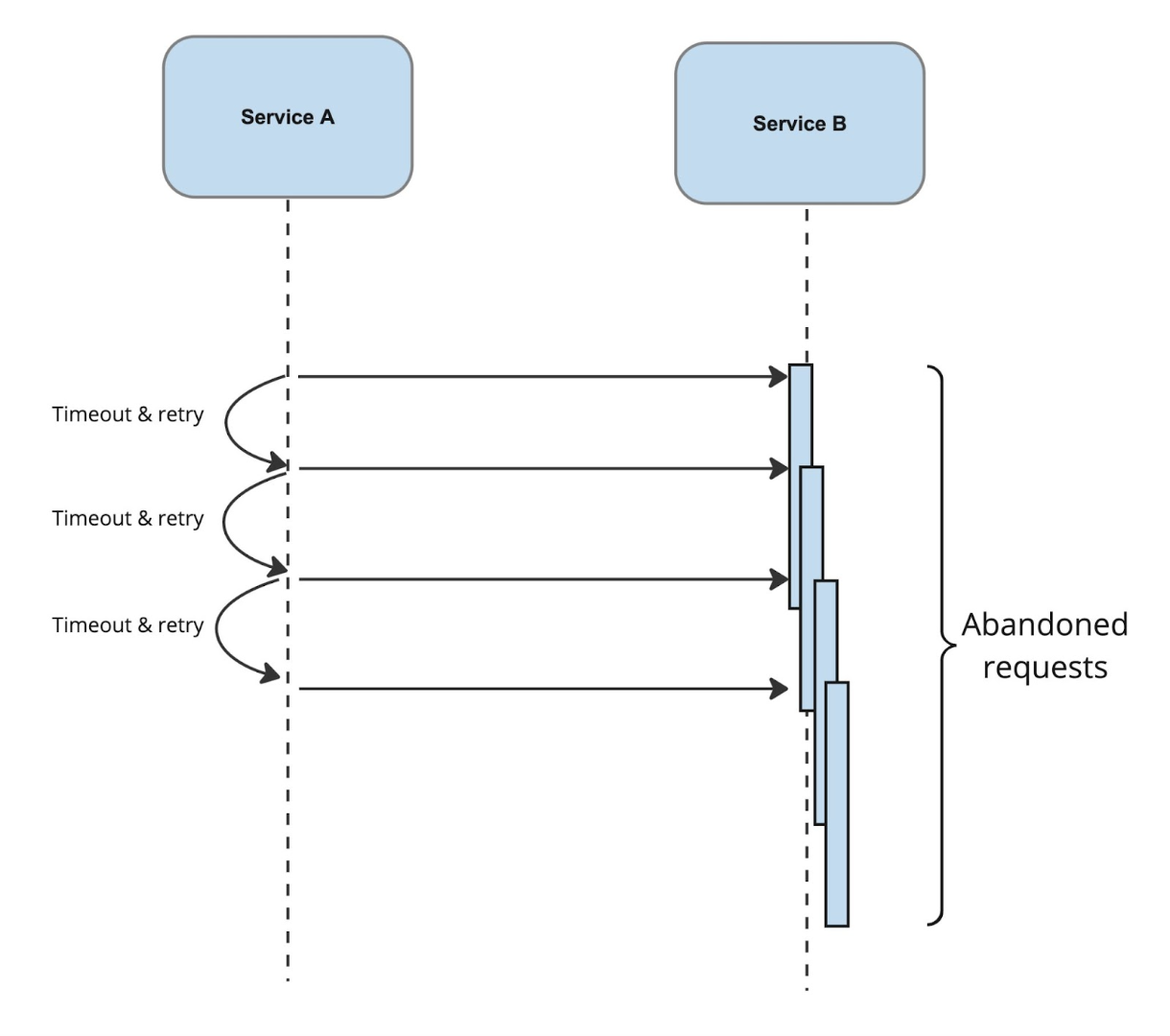

Ballooning abandoned requests

When a call from your service to an upstream server times out, most likely the upstream service will continue executing it even though the original caller is no longer waiting for a response. If you continue retrying and timing out requests, the number of inflight requests on the upstream services can grow to a point where it can hurt the upstream service performance and availability.

Exponential backoff and jitter

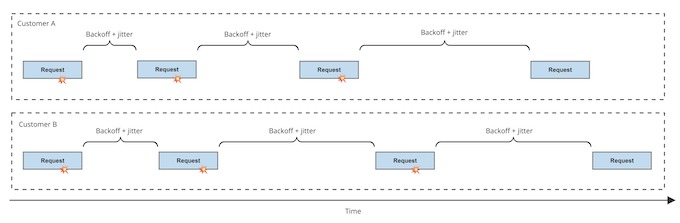

When you retry calls, you should follow a strategy that avoids thundering herds, where calls that failed at almost the same instant are also retried at almost the same time. If the calls failed because the upstream service was overloaded, retrying them at almost the same time could prevent the service from recovering or could worsen the situation.

You should use a retry strategy that uses exponential backoff and jitter. With an exponential backoff, we wait longer on every retry, giving more time to recover to a potentially overloaded upstream. With jitter we introduce some randomness to avoid thundering herds.

In the image above, we have two clients making requests. Both their initial requests fail at almost the same time but are retried at different moments because we use jitter. Notice also how the retries are done with increased delays due to the backoff.

Retry sparingly

Not all requests can or should be retried. For example, if a call to the upstream got a response `400 - BadRequest` then it shouldn't be retried. If the request failed once because it's malformed, retrying won't change the outcome.

As a rule of thumb, you can consider retrying requests which get a response with the following status codes: 429, 500, 502, 503 and 504. Note that the semantics of each of these status codes might be different depending on your environment, so make sure you know on which cases you can get each of them. Finally, if you are making calls to an eventually consistent system, it can be practical to retry a 404 because on the retry, your request can be handled by another replica which does have the resource you are looking for.

Beware of side effects

When retrying calls, you should be aware of potential side effects. Imagine, for example, a call to charge a customer for their purchase. Without treating it with care, we could charge the customer multiple times, once for each retry.

Some calls can always be retried safely. For example, fetching a record. We refer to these calls as idempotent. Calls that are not naturally idempotent can be made so with some care. For example, the upstream could keep track of the progress of a request and only execute the pending parts on retry.

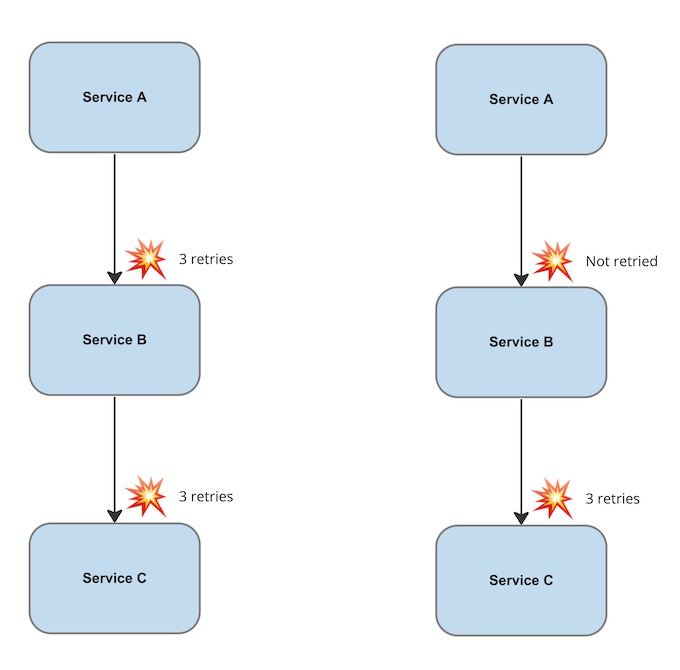

Retry only on one level

A failed call shouldn’t be retried in more than one place. Take the system in the image below. A call from component B to C fails and B retries three times before giving up and returning an error to A, which retries again three times. In total, C would have been called nine times.

Instead, if a call from B to C fails, only B should retry. And if the call keeps failing, B must return an error to A indicating that the call is not retriable.

Retries per request, client or server

The number of retries that can be made can be scoped at different levels. The most common case is to consider the number of available retries at the individual request level. One failed request can be retried N number of times. Above that, we can think of retrial budgets per server. A server might only tolerate a fixed number of retrials per unit of time to protect itself. Lastly, we can apply retry budgets per client or customer. If the requests from one customer fail continuously, it can be practical to not retry them while the underlying problem is resolved.

Load shedding and backpressure

When the upstream service rejects your call because it’s overloaded, a retry from your service would have little chance of succeeding. Not only that, the retry will continue to add load to an already overloaded system. These retries can end up tipping the system into a state from which it can’t recover (see metastability).

To avoid a situation like this, the upstream should shed any additional request it can’t handle currently and indicate (backpressure) that it's overloaded so its consumers postpone or avoid sending further requests.

Conclusion

Engineering is deciding which tradeoffs you are willing to take and understanding the impact of each of them. When you are building a distributed system, you will have to make tradeoffs to decide how the system will behave in the face of errors or long execution times.

With timeouts and retries, you have tools to increase the availability of the systems you build and operate, but as we discussed in this post, you have to consider the impact of these measures on the customer experience and the system. Timeouts and retries can help you keep your system up and running even when exceptional situations arise but can also help bring it down faster when they are not properly used.

At Contentful, we take high availability very seriously and work continuously to improve it. We monitor the interactions between our systems and act when there are communication patterns that are unexpected or unintended. Several times this has led to tweaking the configuration of timeouts and retries in some parts of our stack.