Understanding the different types of AI agents and how they work

Published on February 13, 2026

Generative artificial intelligence has quickly moved past static chatbots into systems that can reason and take actions on their own. Modern workflows tap into advances in reasoning through AI agents, which can reshape how companies deliver richer user experiences. With agent-driven automation steadily gaining ground, now's the perfect time to understand the role of different types of AI agents, their underlying architecture, and how you can use them in real-world scenarios.

What are AI agents?

Traditional AI systems require heavy steering, making them more static and dependent on a developer's guidance. They essentially function as rule-based systems, fitting in AI functionality (like chatbots) within rigid pre-engineered pipelines. Even large language models (LLMs) only generate outputs and can’t act independently or interact with external systems.

AI agents emerged from the need for AI to be proactive: to act as a decision maker that can handle new cases developers couldn’t anticipate. By adding the ability to reason, set goals, and take steps to achieve those goals, agents use their “intelligence” to adapt to their environment.

You can think of an agent as a system built around a reasoning component (LLMs have become the most prominent choice) that understands inputs and autonomously takes steps to achieve specific goals within a digital ecosystem. Because these decision-making systems are driven by natural language, they can be configured by any subject-matter expert, removing the need to translate human reasoning into rigid programming conditions.

What makes AI agents so popular is their ability to be customized. This gives them a modular design, which makes them scalable and easy to fit into almost any AI workflow.

Breaking down how AI agents actually work

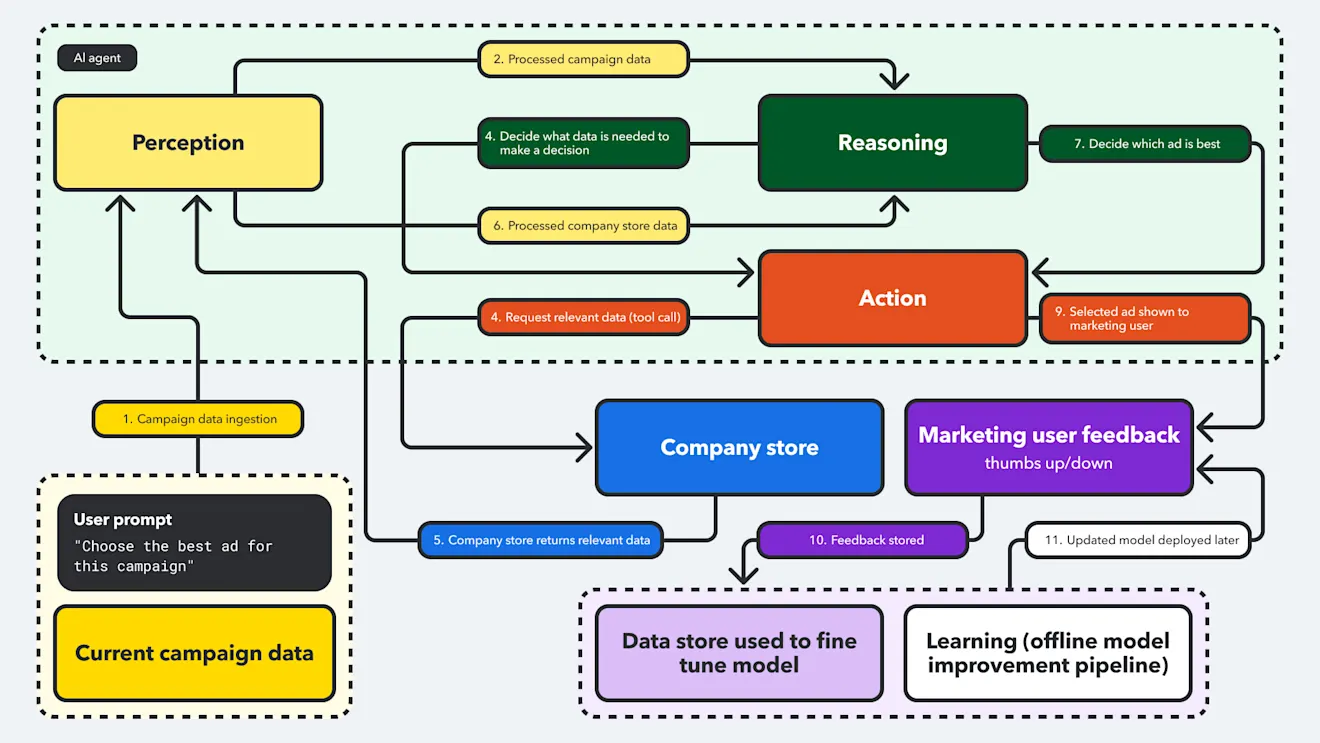

Once an AI agent has been given a goal, it’ll try to accomplish the goal through perception, reasoning, action, and learning. These building blocks of AI agents are described below.

Perception: The ability to take in data and contextual information from the agent’s digital environment and convert it into a format suitable for reasoning. This data can come from APIs, databases, or user inputs. For example, a chatbot “perceives” whatever a user enters and uses it as context for a large language model (LLM), so it can then perform reasoning.

Reasoning: This component is typically powered by LLMs or other AI models and gives agents their intelligence. It uses the input perceived from the ecosystem to decide on appropriate actions. For example, if a user asks the LLM if they can buy the new iPhone, the LLM would first fetch inventory data and reason whether it’s available.

Action: Agents execute decisions by transforming the outcome from the reasoning step into actionable outputs. In modern agents, this process (known as “tool use”) involves using AI’s reasoning to decide when external services are needed to perform specific tasks. For example, if an agent is designed to boost keyword performance, it can access an external tool that gives a detailed breakdown of keywords based on SEO. Because agents are usually linked to external services via APIs and orchestration platforms, it's important to define which actions they're allowed to take.

Feedback and memory (the learning component): LLM-based agents don't update their underlying model while they're running. Mature systems can still use offline fine-tuning to change model behavior, but newer AI agents can also store feedback as they perform tasks and interpret it on later runs. Instead of waiting for a data science team to review, clean, and process feedback before the next model rollout, the agent adapts by reading its stored feedback as context. This shortens the feedback loop considerably and allows for quicker optimization.

For a simple example of how an AI agent uses all four of these components, consider a marketing team that uses an AI agent to choose the best ad for a campaign, based on a company’s campaign data.

First, the agent perceives all marketing and campaign information from the company’s data stores or social pages. Then, based on a reasoning system (rule-based decisions or large benchmark models), it finds the optimal asset. The action component chooses the asset and the learning component closes the loop through a feedback mechanism: If the asset gets a lot of engagement from users, the agent incorporates this information into its decision-making, updating its contextual memory and improving future asset selection.
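The four components above can be sketched as a small loop. This is a minimal illustration, not a production design: the `AdAgent` class, its method names, and the engagement numbers are all hypothetical, and a simple rule stands in for the LLM call a real agent would make in the reasoning step.

```python
from dataclasses import dataclass, field


@dataclass
class AdAgent:
    """Toy agent: perceive campaign data, pick an ad, act, and store feedback."""

    # Memory: last observed engagement per ad (hypothetical structure).
    memory: dict = field(default_factory=dict)

    def perceive(self, raw_campaign_data):
        # Perception: reduce raw records to the ad IDs the reasoner needs.
        return [record["ad_id"] for record in raw_campaign_data]

    def reason(self, ad_ids):
        # Reasoning: a simple rule stands in for an LLM call here.
        # Pick the ad with the best remembered engagement (0.0 if unseen).
        return max(ad_ids, key=lambda ad: self.memory.get(ad, 0.0))

    def act(self, ad_id):
        # Action: a real system would call a publishing tool or API here.
        return f"serving {ad_id}"

    def record_feedback(self, ad_id, engagement):
        # Feedback and memory: store the outcome for future decisions.
        self.memory[ad_id] = engagement


agent = AdAgent()
campaign = [{"ad_id": "banner_a"}, {"ad_id": "banner_b"}]

first_choice = agent.reason(agent.perceive(campaign))
agent.act(first_choice)
agent.record_feedback("banner_a", 0.02)
agent.record_feedback("banner_b", 0.07)
second_choice = agent.reason(agent.perceive(campaign))
print(first_choice, second_choice)  # banner_a banner_b
```

With no feedback stored, the agent falls back to the first ad; once engagement is recorded, the same reasoning step picks the better-performing asset without any change to the code.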

Different types of AI agents

Agents can be grouped into the following types based on how they utilize their perception, reasoning, and decision making.

Simple reflex agents

Simple reflex agents were once defined as agents, but since they act purely on predetermined rules, they are no longer considered agentic today. These agents have no memory, so they can't use context from any past inputs; they are limited to simply reacting to inputs using "if → then" logic.

Despite not really being agentic, simple reflex agents are still useful for simple, pre-defined tasks that follow a routine and don’t expect any deviations from their expected behavior. An example of a simple reflex agent is an automated system for spam detection that removes emails whenever it detects known malicious patterns.
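As a rough sketch, the spam example boils down to a single condition and action. The patterns and the function name below are made up for illustration; a real filter would rely on a maintained rule set.

```python
# Hypothetical patterns, for illustration only.
SPAM_PATTERNS = ("free money", "click here now", "wire transfer")


def reflex_spam_filter(email_body):
    """Apply an "if -> then" rule with no memory of earlier emails."""
    text = email_body.lower()
    if any(pattern in text for pattern in SPAM_PATTERNS):
        return "remove"
    return "deliver"


print(reflex_spam_filter("Click HERE now to claim FREE MONEY"))   # remove
print(reflex_spam_filter("Agenda for Monday's planning meeting"))  # deliver
```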

Model-based reflex agents

Model-based reflex agents follow a similar mechanism to reflex agents, but they also keep track of previous states, so they can use past information as well as current input to help inform their actions.

These agents are great for prediction tasks that need to track past data/interactions to predict an outcome. For example, in the spam detection task mentioned above, a model-based agent could use an AI model to tag malicious content, keep a running count of flagged messages per account, and trigger an account lock if that count exceeds a threshold.
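The difference from a simple reflex agent is the internal state. A minimal sketch, assuming a hypothetical `ModelBasedSpamAgent` class where a keyword check stands in for the AI model and the threshold value is arbitrary:

```python
from collections import defaultdict


class ModelBasedSpamAgent:
    def __init__(self, lock_threshold=2):
        self.lock_threshold = lock_threshold
        # Internal state: how many flagged messages each sender has sent.
        self.flag_counts = defaultdict(int)

    def looks_malicious(self, body):
        # Placeholder for an AI classifier (assumption for this sketch).
        return "free money" in body.lower()

    def handle(self, sender, body):
        if not self.looks_malicious(body):
            return "deliver"
        self.flag_counts[sender] += 1
        # The decision depends on past inputs, not just the current one.
        if self.flag_counts[sender] > self.lock_threshold:
            return "lock_account"
        return "remove"


spam_agent = ModelBasedSpamAgent(lock_threshold=2)
actions = [spam_agent.handle("sender@example.com", "Free money!") for _ in range(3)]
print(actions)  # ['remove', 'remove', 'lock_account']
```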

Goal-based agents

These agents plan actions to achieve a target goal, usually involving planning algorithms to take the optimal sequence of steps.

Naturally, goal-based agents shine for complex tasks that involve multiple steps and require some foresight. For example, conversational agents (chatbots) have a goal state pre-baked into them (this could be something simple like “answer the user’s queries” or more complex like “book an appointment”), and their purpose is to guide a user to that goal successfully through a series of multi-turn dialogues.
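To make the planning idea concrete, here is a small sketch that uses breadth-first search to find the shortest sequence of steps toward a "book an appointment" goal. The action names, the facts they require, and the choice of breadth-first search are all assumptions for illustration; real conversational agents typically lean on an LLM or a dedicated planner.

```python
from collections import deque

# Hypothetical actions: name -> (facts required, fact added).
ACTIONS = {
    "ask_service": (frozenset(), "service"),
    "ask_date": (frozenset({"service"}), "date"),
    "check_availability": (frozenset({"service", "date"}), "slot"),
    "confirm_booking": (frozenset({"slot"}), "booked"),
}


def plan(start, goal):
    """Breadth-first search for the shortest action sequence that reaches goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        facts, steps = queue.popleft()
        if goal in facts:
            return steps
        for name, (required, added) in ACTIONS.items():
            # An action applies when its requirements are known facts.
            if required <= facts and added not in facts:
                next_facts = facts | {added}
                if next_facts not in seen:
                    seen.add(next_facts)
                    queue.append((next_facts, steps + [name]))
    return None  # goal is unreachable from this state


print(plan(frozenset(), "booked"))
# ['ask_service', 'ask_date', 'check_availability', 'confirm_booking']
```

If the user has already named a service, calling `plan(frozenset({"service"}), "booked")` skips the first question, which is the kind of adaptation a fixed script can't make.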

Utility-based agents

Utility-based agents score the possible outcomes of their actions (for example, the effect on a product or service) and choose the option that offers the most benefit toward a preferred result.

These agents come into play when there are multiple goals and the utility of each goal needs to be weighed — particularly when they conflict with each other. For example, a content strategy agent optimizing for SEO may need to choose between trendy but controversial keywords vs. more stable, low-risk keywords.
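One simple way to weigh conflicting goals is a weighted utility function. The keywords, scores, and weights below are invented for illustration, and a linear trade-off is only one of many possible utility definitions.

```python
# Hypothetical keyword candidates with scores between 0 and 1.
CANDIDATES = {
    "viral-controversy": {"traffic": 0.9, "brand_risk": 0.8},
    "evergreen-guide": {"traffic": 0.6, "brand_risk": 0.1},
}


def utility(scores, traffic_weight=1.0, risk_weight=1.0):
    # Reward expected traffic, penalize brand risk.
    return traffic_weight * scores["traffic"] - risk_weight * scores["brand_risk"]


def choose_keyword(candidates, **weights):
    # Pick the candidate with the highest utility under the given weights.
    return max(candidates, key=lambda name: utility(candidates[name], **weights))


print(choose_keyword(CANDIDATES))                   # evergreen-guide
print(choose_keyword(CANDIDATES, risk_weight=0.2))  # viral-controversy
```

Changing the weights changes the decision, which is the point: the trade-off between goals is stated explicitly rather than buried in rules.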

Learning agents

As the name implies, learning agents improve their logic by learning from previous interactions. Learning agents use a feedback mechanism that involves a critic, which evaluates how well the agent performed and uses that feedback to update the agent's internal rules and knowledge base. This type of learning is done while the agent is running, and the feedback can also be used to re-train the model.

These agents are best deployed in changing environments that are hard to predict. For example, personalized social media feeds can’t rely on static LLMs and need to update their strategy constantly. Learning agents learn by reacting to their environment and using reinforcement learning to find the most effective strategy. An agent designed to control social media feeds, for instance, could update what's shown based on the number of clicks and the average time consumers spend reading a post.
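A very small form of reinforcement learning is an epsilon-greedy bandit, sketched below for the feed example. The class name, post types, and reward values are hypothetical; in practice the reward might combine clicks and reading time, and production systems use far richer models.

```python
import random


class FeedLearningAgent:
    """Epsilon-greedy bandit: mostly show what works, sometimes explore."""

    def __init__(self, post_types, epsilon=0.1, seed=0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shows = {p: 0 for p in post_types}
        self.value = {p: 0.0 for p in post_types}  # average reward so far

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.value))  # explore
        return max(self.value, key=self.value.get)    # exploit

    def learn(self, post_type, reward):
        # Critic signal: update the running average reward for this post type.
        self.shows[post_type] += 1
        n = self.shows[post_type]
        self.value[post_type] += (reward - self.value[post_type]) / n


feed_agent = FeedLearningAgent(["video", "article"], epsilon=0.0)
feed_agent.learn("video", 0.2)
feed_agent.learn("article", 0.8)
feed_agent.learn("article", 0.6)
print(feed_agent.choose())  # article
```

Setting `epsilon=0.0` here makes the example deterministic; a non-zero value lets the agent occasionally try other post types so it can notice when audience preferences shift.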

Hierarchical agents

A hierarchical agent is a single agent with a series of internal sub-agents below it. The higher-level agent breaks down complex tasks into a sequence of subtasks, assigns an overall goal, and delegates the intermediate steps to its sub-agents, setting up a separate planning and execution phase.

Hierarchical agents are adopted for tasks requiring coordination between different specialized components. For example, to optimize a landing page, a high-level agent could make a strategy that’s sent to mid-level agents for testing, and ultimately to low-level agents for carrying out changes.
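The landing page example can be sketched as three layers of plain functions, where each level delegates to the one below it. All function names and the strategy contents are placeholders; in a real system each level would be its own agent with its own tools and permissions.

```python
def low_level_apply(change):
    # Low level: carries out a single concrete change.
    return f"applied: {change}"


def mid_level_test(variant):
    # Mid level: turns one variant into concrete changes and delegates them.
    changes = [f"{variant} on desktop", f"{variant} on mobile"]
    return [low_level_apply(change) for change in changes]


def high_level_optimize(page):
    # High level: decides the strategy (planning), then delegates (execution).
    strategy = [f"shorter headline for {page}", f"larger call-to-action for {page}"]
    return {variant: mid_level_test(variant) for variant in strategy}


result = high_level_optimize("pricing")
for variant, outcomes in result.items():
    print(variant, "->", outcomes)
```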

Multi-agent systems

Multi-agent systems, as the name suggests, involve multiple independent agents working together on a solution. They take a decentralized approach, dividing tasks among themselves and collaborating to meet short- and long-term objectives.

An example of this is a multi-agent content creation workflow. This could be structured as a pipeline, where one agent researches data, another agent drafts the content, and other agents handle referencing and editing.
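A pipeline like this can be sketched as a list of independent agents that each add something to a shared draft. The agent functions and the fields they write are hypothetical stand-ins; each one would wrap its own model and tools in practice.

```python
def research_agent(draft):
    # Placeholder facts; a real agent would query data sources.
    return {**draft, "facts": ["fact 1", "fact 2"]}


def drafting_agent(draft):
    body = " ".join(f"Point: {fact}." for fact in draft["facts"])
    return {**draft, "body": body}


def referencing_agent(draft):
    return {**draft, "references": [f"source for {fact}" for fact in draft["facts"]]}


def editing_agent(draft):
    return {**draft, "body": draft["body"].strip(), "status": "edited"}


PIPELINE = [research_agent, drafting_agent, referencing_agent, editing_agent]


def run_pipeline(topic):
    draft = {"topic": topic}
    for agent_step in PIPELINE:
        # Each agent only sees the shared draft, not the other agents.
        draft = agent_step(draft)
    return draft


article = run_pipeline("types of AI agents")
print(article["status"], len(article["references"]))  # edited 2
```

Because each agent only reads and writes the shared draft, agents can be swapped or reordered without touching the others.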

It’s important to take note of cases where AI solutions combine different categories of agents, because not all agents fall neatly into a single category. Based on their use case and scope of flexibility, agents can also be pre-built or custom made.

Many solutions use ready-made agents that can be deployed off the shelf. These agents come loaded with pre-baked roles, tools, and instructions and usually allow for only minor adjustments to the goal and the parameters guiding it. Custom AI agents, on the other hand, are designed to be more flexible and suitable for teams looking to implement their own logic through proprietary tools/APIs and company-specific reasoning pipelines.

Evolving digital content workflows with AI agents

AI agents are already changing workflows for different content-related business operations. Since digital content is so readily available and AI models benefit from the surplus of data, integrating AI agents into these workflows is relatively straightforward. These agents have become integral to tasks that involve automating content creation and personalizing content to match a company’s brand voice.

The table below shows how each AI agent type can be used for content workflows and their benefits to devs, content teams, and digital leaders.

| Type of AI agent | How it works | How it benefits digital content |
|---|---|---|
| Based on architecture (How the agent “thinks”) | | |
| Simple reflex agent | Responds to rules and triggers. | Auto-tags assets and filters spam and duplicate content. |
| Model-based reflex agent | Uses an existing internal model that adapts to external actions. | Prioritizes emails based on intent, tracking different stages of publishing info. |
| Goal-based agent | Plans steps to achieve a pre-defined objective. | Schedules global campaigns. Helps digital leaders and marketing teams coordinate complex content goals and hit deadlines. |
| Utility-based agent | Balances multiple goals and maximizes the value of a product. | Has personalization options (optimizing asset selection based on engagement, compliance, branding, etc.). Helps product owners and content strategists focus on the real-world impact. |
| Operational Modes (How the agent evolves and scales) | | |
| Learning agent | Learns from feedback to improve over time. | Helps developers and content teams improve user experiences using a recommendation agent that works out the best content to display for each audience segment. |
| Multi-agent system | Agents collaborate with each other to handle different parts of a workflow. | Performs large-scale content enrichment (generates tags and localized variations of content for thousands of assets simultaneously). |
| Hierarchical agent | A (higher access) orchestrator delegates subtasks to agents with specific access levels. | Manages workloads by using multiple specialized agents. Manages multi-stage campaigns and cross-team collaboration. |

Best practices for managing AI agents in digital content workflows

Managing high-volume streams of data in digital content workflows can get overwhelming, so it's necessary to have a standardized set of best practices to fall back on.

Optimize your AI agent setup: Layer different types of AI agents to match their specific strengths while designing a workflow. For example, in a content workflow, use simple reflex agents for tagging metadata and more complex utility-based agents for categorizing and generating content. It’s also good practice to break complex tasks into sub-problems and assign specialized agents for each one.

Monitor your AI agent: Have humans review critical steps, and don’t let AI publish content without a human in the loop (HITL). Preserve brand safety by specifying which tasks an AI agent is and isn’t allowed to perform. For example, you could allow the agent to suggest content edits or add tags, but not publish content. Ideally, you want to feed this review feedback back into your agents, building up their learning component so that manual intervention can be reduced over time and your workflow becomes more streamlined.

Build explainability into your agentic systems: Teams need to understand why an agent made a particular decision. Catch issues like biased outputs and AI hallucinations by building traceability into your AI agents. You can achieve this through multi-agent collaboration and by incorporating feedback loops with specialized debug and validation agents designed to catch errors. You can also build in explainability using audit trails.
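The monitoring and explainability practices above can be combined in one small guardrail: check each requested action against a policy, and log every decision along with the agent's stated reason. The action names, the policy sets, and the in-memory log are assumptions for this sketch, not a description of any particular platform's API.

```python
from datetime import datetime, timezone

# Hypothetical policy: what the agent may do alone vs. with human sign-off.
ALLOWED_ACTIONS = {"suggest_edit", "add_tag"}
NEEDS_HUMAN_APPROVAL = {"publish"}

audit_log = []  # in-memory stand-in for a persistent audit trail


def authorize(action, reason, approved_by_human=False):
    """Decide whether an action may run, and log the decision with its reason."""
    if action in ALLOWED_ACTIONS:
        decision = "execute"
    elif action in NEEDS_HUMAN_APPROVAL:
        decision = "execute" if approved_by_human else "queue_for_review"
    else:
        decision = "reject"  # anything not listed is denied by default
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "action": action,
        "reason": reason,
        "decision": decision,
    })
    return decision


print(authorize("add_tag", "image shows a product photo"))             # execute
print(authorize("publish", "draft passed all checks"))                 # queue_for_review
print(authorize("publish", "editor signed off", approved_by_human=True))  # execute
print(authorize("delete_entry", "looks like a duplicate"))             # reject
```

Denying unlisted actions by default keeps new or unexpected tool calls out of production until someone explicitly allows them, and the log gives reviewers the reason behind each decision.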

Try Contentful’s AI-powered platform and experiment with how smart automation can transform digital content workflows

Contentful speeds up content delivery through AI Automations, which automate workflows, giving digital content agents the agency to execute AI Actions in bulk across the content management system (CMS). These agents are easily integrated through specialized APIs (for content management and delivery), SDKs, HTTP requests, and webhooks, giving teams the ability to handle tasks like asset tagging, multilingual content, compliance checks, and keeping content synced up.

Leveraging the power of content models also offers structured data to different digital content agents, helping them take precise actions during tool calling. Digital content is rarely neatly organized, but because Contentful supports different content types, agents can understand data relationships and easily organize content using metadata (like SEO summaries and campaign tags). To maintain consistency, the AI Suggestions feature helps catch issues like policy violations and editing mistakes before content goes live, and AI-generated content traceability in Audit Logs improves accountability by providing a clear decision trail of all AI activity.

Now that you understand the different types of AI agents, it’s time to start building smarter digital experiences by testing some off-the-shelf AI agents directly within the Contentful Platform.
