Set up Audit Logs

Table of contents

- What are audit logs?

- Audit log delivery

- Event details

- Events captured by the audit log

- Audit log file naming convention

- Data retention period

- Requirements

- Audit logs set up

What are audit logs?

Audit logs allow customers to track and view all the changes made in their organization. They provide visibility and are useful for investigating an incident or getting a detailed report on relevant events (such as changes to roles and permissions, users invited, spaces deleted, etc.).

The audit logs feature securely transfers this information to your own storage (an AWS S3 bucket or Azure Blob Store), ensuring that you have a clear and accessible history of actions for monitoring and analysis purposes.

Audit log delivery

Audit logs are shipped to your AWS S3 bucket or Azure Blob Store. Keeping audit logs in storage that you own gives you control over them and lets you retain them for as long as necessary. Storing the data in your own storage has the following benefits:

- Consistency: You can apply the same rules and policies to audit logs as you do to other similar data, including who has access to them.

- Data retention: You can keep audit logs for as long as your company needs them to maintain compliance.

- Data analysis: You can feed the data into the analysis tools you already use.

Events captured by the audit log

Audit logs capture all changes made through the Content Management API (CMA), covering actions and modifications across your entire Contentful organization. This includes content creation, updates, deletions, and configuration changes performed by users or apps interacting with the CMA.


Activity

| Activity ID | Activity name | Description |
|---|---|---|
| 0 | Unknown | The event activity is unknown. |
| 1 | Create | One or more resources were created. |
| 2 | Read | One or more resources were read/viewed. |
| 3 | Update | One or more resources were updated. |
| 4 | Delete | One or more resources were deleted. |
| 5 | Search | A search was performed on one or more resources. |
| 6 | Import | One or more resources were imported into an Application. |
| 7 | Export | One or more resources were exported from an Application. |
| 8 | Share | One or more resources were shared. |
| 99 | Other | The event activity is not mapped. See the `activity_name` attribute, which contains a data-source-specific value. |
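If you process the log files in code, the activity table can be kept as a lookup. The following Python sketch assumes each log entry has already been parsed from JSON into a dict with an integer `activity_id` key; `ACTIVITY_NAMES` and `activity_name_for` are hypothetical names, not part of any Contentful SDK.

```python
# Activity IDs and names from the table above.
ACTIVITY_NAMES = {
    0: "Unknown",
    1: "Create",
    2: "Read",
    3: "Update",
    4: "Delete",
    5: "Search",
    6: "Import",
    7: "Export",
    8: "Share",
    99: "Other",
}


def activity_name_for(event: dict) -> str:
    """Return a readable activity name for one parsed audit log entry.

    Hypothetical helper: assumes `event` is a dict with an integer
    `activity_id`. For 99 (Other), the source-specific `activity_name`
    attribute is returned when present.
    """
    activity_id = event.get("activity_id", 0)
    if activity_id == 99 and event.get("activity_name"):
        return event["activity_name"]
    return ACTIVITY_NAMES.get(activity_id, "Unknown")


print(activity_name_for({"activity_id": 4}))  # Delete
```

IDs that are not in the table fall back to "Unknown" rather than raising, so a parser keeps working if new activities are added later.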

Category

The category properties identify the OCSF category and sub-category under which a log falls. Currently, all audit logs fall under the Application Activity category and, more specifically, the Web Resources Activity sub-category. This may expand in the future, but for now you can build integrations around this type of log specifically. The table below describes each category-related property in the log.

| Property | Description |
|---|---|
| category_uid | The category unique identifier of the event. Value: `6` (Application Activity). Application Activity events report detailed information about the behavior of applications and services. |
| class_uid | The unique identifier of a class. A class describes the attributes available in an event. Value: `6001` (Web Resources Activity). Web Resources Activity events describe actions executed on a set of Web Resources. |
| type_uid | The event/finding type ID. It identifies the event's semantics and structure. The logging system calculates it as `class_uid * 100 + activity_id`. |
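Because `type_uid` is derived from `class_uid` and `activity_id`, it can be computed or decoded with simple arithmetic. For example, an Update (`activity_id` 3) in the Web Resources Activity class gives 6001 * 100 + 3 = 600103. The Python sketch below shows both directions; the function names are hypothetical, and decoding with `divmod` relies on `activity_id` being below 100, which holds for every ID in the activity table (the largest is 99).

```python
from typing import Tuple

# class_uid for Web Resources Activity, from the table above.
WEB_RESOURCES_ACTIVITY = 6001


def type_uid(class_uid: int, activity_id: int) -> int:
    """Compute type_uid as class_uid * 100 + activity_id."""
    return class_uid * 100 + activity_id


def split_type_uid(value: int) -> Tuple[int, int]:
    """Recover (class_uid, activity_id); valid because activity_id < 100."""
    return divmod(value, 100)


print(type_uid(WEB_RESOURCES_ACTIVITY, 3))  # 600103 (an Update)
print(split_type_uid(600103))  # (6001, 3)
```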

Actor

The actor property describes the user or app that acted on the resource by providing their user_id.

| User type | Description |
|---|---|
| user | The action was performed by a user, either in the Contentful web app or the Contentful Command Line Interface (CLI). Note: The `user_id` field only displays a unique identifier for the user (e.g., `2EHL4afAmn1k10yd6dJxcl`). To get a full list of users with their names and email addresses, use the User Management API. |
| app | The action was performed by a Contentful Marketplace app. |

Severity

The OCSF documentation defines `severity_id` as follows: “The normalized severity is a measurement of the effort and expense required to manage and resolve an event or incident. Smaller numerical values represent lower impact events, and larger numerical values represent higher impact events.” The table below maps each ID to its description.

| Severity ID | Name | Description |
|---|---|---|
| 0 | Unknown | The event/finding severity is unknown. |
| 1 | Informational | Informational message. No action required. |
| 2 | Low | The user decides if action is needed. |
| 3 | Medium | Action is required but the situation is not serious at this time. |
| 4 | High | Action is required immediately. |
| 5 | Critical | Action is required immediately and the scope is broad. |
| 6 | Fatal | An error occurred but it is too late to take remedial action. |
| 99 | Other | The event/finding severity is not mapped. See the `severity` attribute, which contains a data-source-specific value. |
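The IDs from 0 to 6 are ordered by impact, while 99 means "not mapped", so a filter that alerts on high-severity events has to treat 99 separately. A minimal Python sketch, assuming each log entry is parsed into a dict with a `severity_id` key; `events_at_or_above` is a hypothetical helper name.

```python
# Severity IDs and names from the table above.
SEVERITY_NAMES = {
    0: "Unknown", 1: "Informational", 2: "Low", 3: "Medium",
    4: "High", 5: "Critical", 6: "Fatal", 99: "Other",
}
OTHER = 99  # not mapped, so not part of the ordered 0-6 scale


def events_at_or_above(events, min_severity_id: int):
    """Yield events whose severity_id is at least `min_severity_id`.

    Events with severity 99 (Other) are skipped because 99 is not a
    higher severity than 6 (Fatal), just an unmapped one.
    """
    for event in events:
        severity_id = event.get("severity_id", 0)
        if severity_id != OTHER and severity_id >= min_severity_id:
            yield event
```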

Currently, all audit logs are assigned a severity of 0 (Unknown) while we gather feedback and, over time, identify the estimated severity of each request.

HTTP Request

The http_request object describes the original HTTP request that resulted in the log. The table below describes each of its properties.

| Property | Description |
|---|---|
| http_method | The HTTP method used in the request. At this time, GET requests aren’t supported: during the current Beta, the focus is only on requests that alter state on the Contentful platform. |
| referer | The URL the request came from. |
| url | An object containing details about the URL requested in the log. |
| url.path | The full path that was called in the request. |
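To scan a batch of logs quickly, it can help to reduce each `http_request` object to a one-line summary. A minimal Python sketch, assuming each log entry is parsed into a dict whose `http_request` holds `http_method` and `url.path` as described above; the helper names are hypothetical.

```python
from collections import Counter


def describe_request(event: dict) -> str:
    """Summarize http_request as 'METHOD path', e.g. 'PUT /spaces/...'."""
    request = event.get("http_request", {})
    method = request.get("http_method", "?")
    path = request.get("url", {}).get("path", "?")
    return f"{method} {path}"


def count_by_method(events) -> Counter:
    """Count events per HTTP method, e.g. to spot a burst of DELETE requests."""
    return Counter(e.get("http_request", {}).get("http_method", "?") for e in events)
```

Missing fields are rendered as `?` instead of raising, which keeps a summary script running over partially populated entries.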

HTTP Response

The http_response object contains the HTTP response code returned for the request, which lets you determine whether the request was successful.
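A success check then only needs the response code. This Python sketch assumes the code is an integer stored under `http_response.code`, following the OCSF naming, and treats any 2xx status as success; the function names are hypothetical.

```python
def request_succeeded(event: dict) -> bool:
    """True when http_response.code is a 2xx status code."""
    code = event.get("http_response", {}).get("code")
    return isinstance(code, int) and 200 <= code < 300


def failed_requests(events) -> list:
    """Return entries whose request did not succeed or has no response code."""
    return [event for event in events if not request_succeeded(event)]
```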

Enrichments

The enrichments property provides an array of objects that enrich other properties in the log. More details can be found in the OCSF documentation. In an example enrichment of the request path, each property means the following:

| Property | Meaning |
|---|---|
| `"name": "http_request.url.path"` | The `path` property inside the `url` property, which is inside the `http_request` property, is being enriched with additional data. |
| `"type": "Space"` | The enrichment provides information about a space. Types refer to entity names in the Contentful API documentation. |
| `"value": "/spaces/<space_id>/environments/<environment_id>"` | The original value without any enrichment. |
| `"data"` | The object containing the additional data that enriches the original value. |
| `{ "space_id": "<space_id>" }` | In this scenario, the `space_id` is provided, so there is no need to parse the URL to retrieve it. |
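The `data` object is what makes enrichments convenient: instead of splitting `/spaces/<space_id>/environments/<environment_id>` yourself, you can read the value directly. A minimal Python sketch, assuming parsed log entries with an `enrichments` array shaped like the table above; the helper names are hypothetical.

```python
from typing import Optional


def enrichment_data(event: dict, name: str, type_: str) -> Optional[dict]:
    """Return the `data` object of the first enrichment matching name and type."""
    for enrichment in event.get("enrichments", []):
        if enrichment.get("name") == name and enrichment.get("type") == type_:
            return enrichment.get("data")
    return None


def space_id_of(event: dict) -> Optional[str]:
    """Read space_id from the Space enrichment of http_request.url.path."""
    data = enrichment_data(event, "http_request.url.path", "Space")
    return data.get("space_id") if data else None
```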

Web Resources

The web_resources property contains an array of the entities affected by the request, such as environments, locales, content types, or entries. Each object in the array has the following properties:

| Property | Meaning |
|---|---|
| type | The entity type of the affected resource, as named in the Contentful API documentation (for example, an Entry or a ContentType). |
| uid | The ID of the affected resource, for example the ID of the entry or the name of the content type. |
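When investigating an incident, a common question is which events touched a particular entry or content type. The Python sketch below groups affected resources by type and filters events by resource; it assumes parsed log entries with a `web_resources` array of `type`/`uid` objects, and the helper names are hypothetical.

```python
from collections import defaultdict


def resources_by_type(event: dict) -> dict:
    """Group the IDs of affected resources by entity type."""
    grouped = defaultdict(list)
    for resource in event.get("web_resources", []):
        grouped[resource.get("type", "Unknown")].append(resource.get("uid"))
    return dict(grouped)


def events_touching(events, resource_type: str, uid: str) -> list:
    """Return the events that affected one resource, e.g. a single entry."""
    return [
        event
        for event in events
        if any(
            r.get("type") == resource_type and r.get("uid") == uid
            for r in event.get("web_resources", [])
        )
    ]
```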

Metadata

The metadata property contains miscellaneous data that doesn’t fit elsewhere in the log but may still be useful. Currently, this object contains only the two properties described in the table below.

| Property | Meaning |
|---|---|
| version | The version of the OCSF schema this log adheres to. |
| uid | A unique Contentful request ID. Provide it to Contentful Support when investigating a log entry; it helps Support trace what happened internally. |
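When opening a support request, the request ID is the value to include. This Python sketch formats it together with the schema version, and lets a parser reject logs written against a schema version it was not built for; the function names are hypothetical, and the set of supported versions is something you supply.

```python
def support_reference(event: dict) -> str:
    """Format a log entry's request ID and schema version for a support ticket."""
    metadata = event.get("metadata", {})
    request_id = metadata.get("uid", "n/a")
    schema_version = metadata.get("version", "n/a")
    return f"Contentful request ID: {request_id} (OCSF schema {schema_version})"


def schema_supported(event: dict, supported_versions: set) -> bool:
    """True if the log's OCSF schema version is one your parser was built for."""
    return event.get("metadata", {}).get("version") in supported_versions
```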

Requirements

AWS or Azure account: An active AWS or Azure account is necessary.

Stopping the audit logs delivery

To stop the delivery of audit logs, contact Contentful Support.

Audit logs set up

To set up your infrastructure to receive Audit Logs, you will need to make some configuration changes and share some information with Contentful.

The exact process depends on your storage provider. Follow the guide for your provider:

Audit logs AWS Configuration

As part of enabling audit log shipping to your AWS S3 bucket, you need to create an AWS IAM role that Contentful can assume. This will allow Contentful to securely transfer audit logs to your AWS S3 bucket without the need to store any credentials.

Security of IAM role assumption

Contentful uses AWS IAM role assumption to securely deliver audit logs to your specified S3 bucket. This approach follows AWS’s recommended best practices for granting third-party access to your resources. With IAM role assumption:

- No credentials are shared: Contentful does not require or store your AWS credentials. Instead, a secure trust relationship is established through the IAM role.

- Explicit permissions: You maintain full control by explicitly defining which actions Contentful can perform (e.g., s3:PutObject) and on which resources (e.g., specific S3 buckets).

- Control over the trust policy: The trust policy for the IAM role allows access only to Contentful’s AWS account and requires an external ID for added security.

- Industry standard: Role assumption is widely adopted by leading SaaS platforms, ensuring a secure, scalable, and traceable approach.

For more details, refer to the AWS IAM documentation.

Prerequisites

- An AWS account with permissions to create IAM roles and edit S3 bucket policies.

- Contentful's AWS account IDs:

  - For US customers: 606137763417

  - For EU data residency customers: 101997328120

Step 1: Create an S3 Bucket

- Log in to your AWS Management Console.

- Navigate to S3 and click Create bucket.

- Enter a unique bucket name and select the region where you want the bucket to reside. Note: you will need to enter this name later.

- Configure options as required (e.g., versioning, logging, tags, object lock).

- Review and create the bucket.

Step 2: Create a New IAM Policy

- Log in to your AWS Management Console.

- Navigate to IAM -> Policies -> Create policy.

- Select the JSON tab and paste the following policy, replacing `<Your-S3-Bucket-Name>` with the name of your S3 bucket (from Step 1). Make sure to keep the `/*` at the end:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::<Your-S3-Bucket-Name>/*"
    }
  ]
}
```

- Click Next, give it a meaningful name and description, and then click Create.
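If you manage infrastructure as code, you may prefer to render this policy rather than edit the placeholder by hand. A minimal Python sketch using only the standard library; `s3_put_object_policy` and the bucket name `my-audit-logs` are illustrative, not names Contentful requires.

```python
import json


def s3_put_object_policy(bucket_name: str) -> str:
    """Render the Step 2 IAM policy for one bucket as JSON text."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "s3:PutObject",
                # The trailing /* makes the grant apply to objects in the bucket.
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


print(s3_put_object_policy("my-audit-logs"))  # hypothetical bucket name
```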

Step 3: Create a New IAM Role for Cross-Account Access

- In the IAM dashboard, go to Roles -> Create role.

- Select AWS Account under the "Trusted entity type" section, then in the section below select Another AWS account and enter Contentful's AWS account ID:

  - For US customers: 606137763417

  - For EU data residency customers: 101997328120

- Enable the option Require external ID and insert your Contentful organization ID. The primary function of the external ID is to address and prevent the confused deputy problem. You can find the organization ID in the Contentful web app.

- Click Next, skip attaching permissions policies now (we will attach the policy created in Step 2).

- Review, name the role, and then create it.
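When you choose Another AWS account and enable Require external ID, the console attaches a trust policy to the role. It typically looks like the document rendered below, which can be useful for review or for infrastructure as code. This is a sketch: the `US`/`EU` keys and the function name are hypothetical, and you should compare the result with the trust policy shown on the role's Trust relationships tab.

```python
import json

# Contentful's AWS account IDs from the prerequisites above.
CONTENTFUL_ACCOUNT_IDS = {"US": "606137763417", "EU": "101997328120"}


def contentful_trust_policy(data_residency: str, organization_id: str) -> str:
    """Render a trust policy that lets Contentful's account assume the role,
    but only when it passes your organization ID as the external ID."""
    account_id = CONTENTFUL_ACCOUNT_IDS[data_residency]
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_id}:root"},
                "Action": "sts:AssumeRole",
                # The external ID check guards against the confused deputy problem.
                "Condition": {"StringEquals": {"sts:ExternalId": organization_id}},
            }
        ],
    }
    return json.dumps(policy, indent=2)
```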

Step 4: Attach the Policy to the IAM Role

- Go to the newly created role in IAM -> Roles.

- Under "Permissions" in the Add permissions dropdown, click Attach policies.

- Find the policy you created in Step 2, select it, and then click Add permission.

Step 5: Configure Your S3 Bucket Policy

- Go to S3, find your bucket from Step 1, and then click Permissions.

- Edit the Bucket policy and add the following statement, replacing `<Your-IAM-Role-ARN>` with the ARN of the IAM role you created in Step 3 and `<Your-S3-Bucket-Name>` with the name of your S3 bucket. Make sure to keep the `/*` at the end of the bucket ARN:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "AWS": "<Your-IAM-Role-ARN>"
      },
      "Action": "s3:PutObject",
      "Resource": "arn:aws:s3:::<Your-S3-Bucket-Name>/*"
    }
  ]
}
```

- Save the changes.

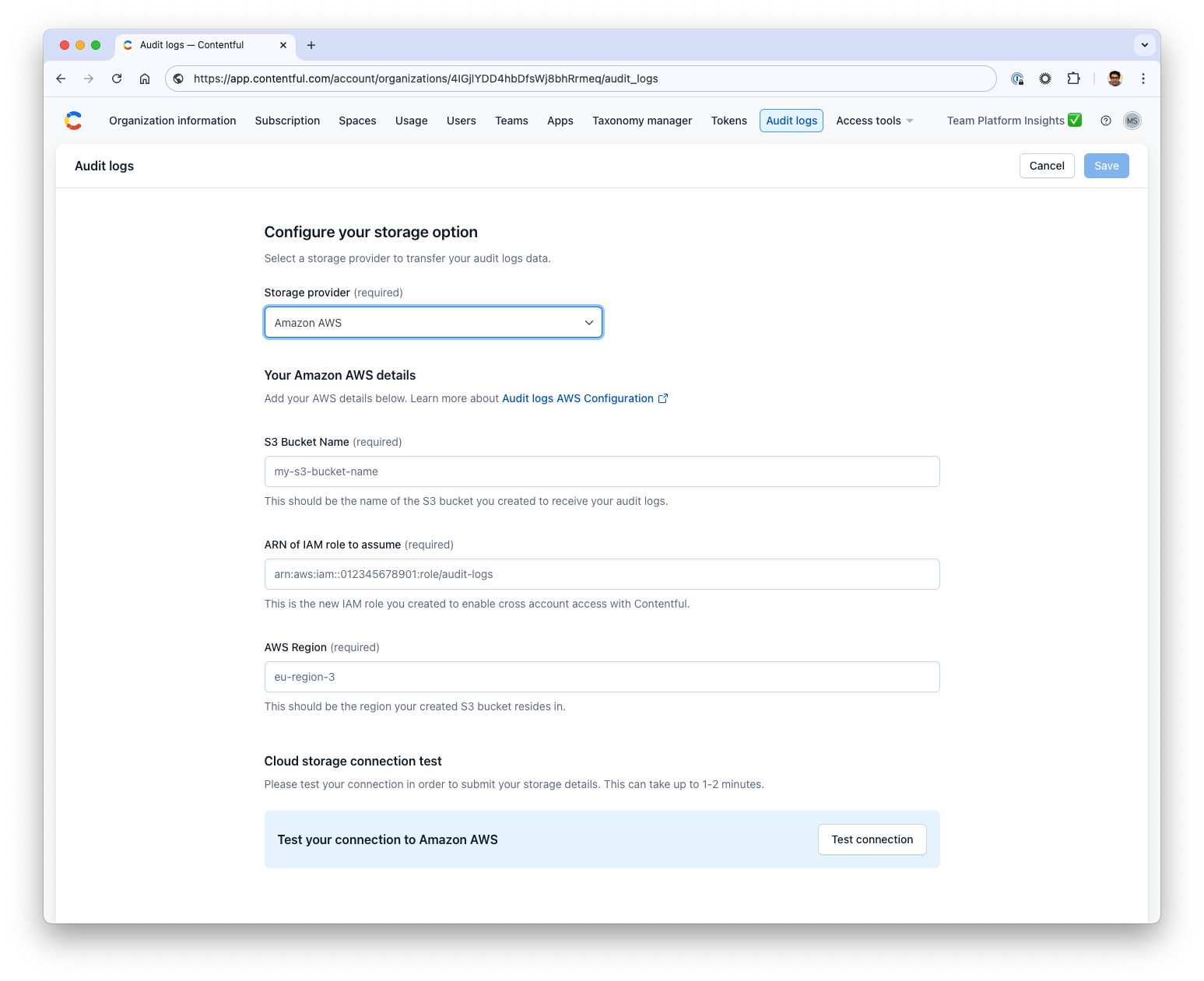
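If the bucket already has a policy, the statement from Step 5 must be added to the existing `Statement` array rather than replacing the whole document. A minimal Python sketch of that merge, assuming you pass the current policy JSON (or `None` when the bucket has no policy); the function name and example ARN are hypothetical.

```python
import json
from typing import Optional


def add_put_object_statement(existing: Optional[str], role_arn: str, bucket_name: str) -> str:
    """Add the Step 5 statement to a bucket policy, creating one if needed.

    The statement is not added twice if an identical one is already present.
    """
    policy = json.loads(existing) if existing else {"Version": "2012-10-17", "Statement": []}
    statements = policy.setdefault("Statement", [])
    if isinstance(statements, dict):  # a single statement may be written as an object
        statements = [statements]
        policy["Statement"] = statements
    new_statement = {
        "Effect": "Allow",
        "Principal": {"AWS": role_arn},
        "Action": "s3:PutObject",
        # Keep the trailing /* so the grant applies to objects in the bucket.
        "Resource": f"arn:aws:s3:::{bucket_name}/*",
    }
    if new_statement not in statements:
        statements.append(new_statement)
    return json.dumps(policy, indent=2)


print(add_put_object_statement(
    None, "arn:aws:iam::123456789012:role/contentful-audit-logs", "my-audit-logs"
))
```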

Step 6: Configure AWS S3 storage in the Contentful web application

Note: Only organization owners and organization admins can add and edit the storage details in Contentful.

- In Contentful, navigate to Organization settings -> Audit logs.

- Select Amazon AWS as a Storage provider option and fill in the form with the following details:

- S3 Bucket Name: The name of the S3 bucket you’ve created for storing audit logs.

- ARN of IAM Role: The Amazon Resource Name (ARN) of the IAM role that Contentful will assume to send logs to your bucket.

- AWS Region: The AWS region where your S3 bucket is located.

- Click Test connection to verify that the connection to your AWS S3 bucket is working correctly. During this test, Contentful sends a file to ensure the configuration is valid. If the test succeeds, you’ll see a confirmation message.

- If the connection test is successful, click Save to finalize the configuration.

Note: If the test fails, double-check that your bucket permissions, IAM Role, and policy configurations match the requirements outlined earlier in this guide.
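Some connection-test failures come from pasting the wrong kind of value, such as an `s3://` URL instead of a bucket name. The Python sketch below runs basic format checks on the three form values before you retry; the patterns are simplified assumptions that do not cover every AWS naming rule, so a passing result does not guarantee the connection will work.

```python
import re

# Simplified patterns (assumptions): they catch common mistakes but do not
# implement every AWS naming rule.
BUCKET_NAME = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
ROLE_ARN = re.compile(r"arn:aws[a-z-]*:iam::\d{12}:role/[\w+=,.@/-]+")
REGION = re.compile(r"[a-z]{2}(-[a-z]+)+-\d+")


def check_aws_form_values(bucket_name: str, role_arn: str, region: str) -> list:
    """Return a list of problems; an empty list means the basic checks passed."""
    problems = []
    if not BUCKET_NAME.fullmatch(bucket_name):
        problems.append("S3 Bucket Name: use the bare bucket name, not an ARN or s3:// URL")
    if not ROLE_ARN.fullmatch(role_arn):
        problems.append("ARN of IAM Role: expected arn:aws:iam::<account-id>:role/<role-name>")
    if not REGION.fullmatch(region):
        problems.append("AWS Region: expected a region code such as eu-central-1")
    return problems
```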

By following these steps, you've securely enabled Contentful to ship logs to your AWS S3 bucket. Contentful will use AWS STS to assume the role you've created, ensuring a secure and efficient transfer of audit log data.

Audit Logs Azure Configuration

As part of enabling audit log shipping to your Azure Blob Storage container, you need to create a Shared Access Signature (SAS) token that Contentful can use. This allows Contentful to securely transfer audit logs directly to your Azure Storage Account container. This guide walks you through creating a SAS token specifically for Contentful.

A shared access signature (SAS) provides secure delegated access to resources in your storage account. With a SAS, you have granular control over how a client can access your data. For example:

- What resources the client may access

- What permissions they have to those resources

- How long the SAS is valid

We use RSA 4096 encryption to secure your SAS token in transit and at rest. Access to decrypt it is provided only to the Audit Logging system and a small number of people who manage the Audit Logging service. For more information, contact us via support.

Prerequisites

- An Azure account with access to create a Blob Store and a SAS token.

Step 1: Create an Azure Storage Account

- Log in to your Azure Portal.

- Navigate to Storage Accounts -> Create.

- Select the Subscription under which to create the Storage Account.

- Select or create the Resource Group for the Storage Account.

- Enter a unique storage account name and select the region where you want the account to reside. Note this name; you will need it later.

- Configure options as required (e.g., performance, redundancy).

- Click Review + create.

- On the Review + create page, check that everything is correct, then click Create to create the storage account.

Step 2: Create a Container

- Log in to your Azure Portal.

- Navigate to Storage Accounts and click the one you created in Step 1 to open it.

- On the left sidebar, under Data storage, click Containers.

- In the top toolbar, click the + Container button to create a new container.

- Enter a unique container name.

- Configure options as required (e.g., encryption scope, versioning).

- Review and click Create to create the container.

Step 3: Create the SAS Token

- Log in to your Azure Portal.

- Navigate to Storage Accounts and click the one you created in Step 1 to open it.

- On the left sidebar, under Data storage, click Containers.

- Click the name of the container you created in Step 2.

- On the left sidebar, under Settings, click Shared access tokens.

- Select the Permissions dropdown, deselect Read, then select Create and Write.

- Set an expiry date that complies with your secret rotation policy.

- Click Generate SAS token and URL.

- Copy the value of the Blob SAS URL field that's displayed. You will use this URL in the next step.

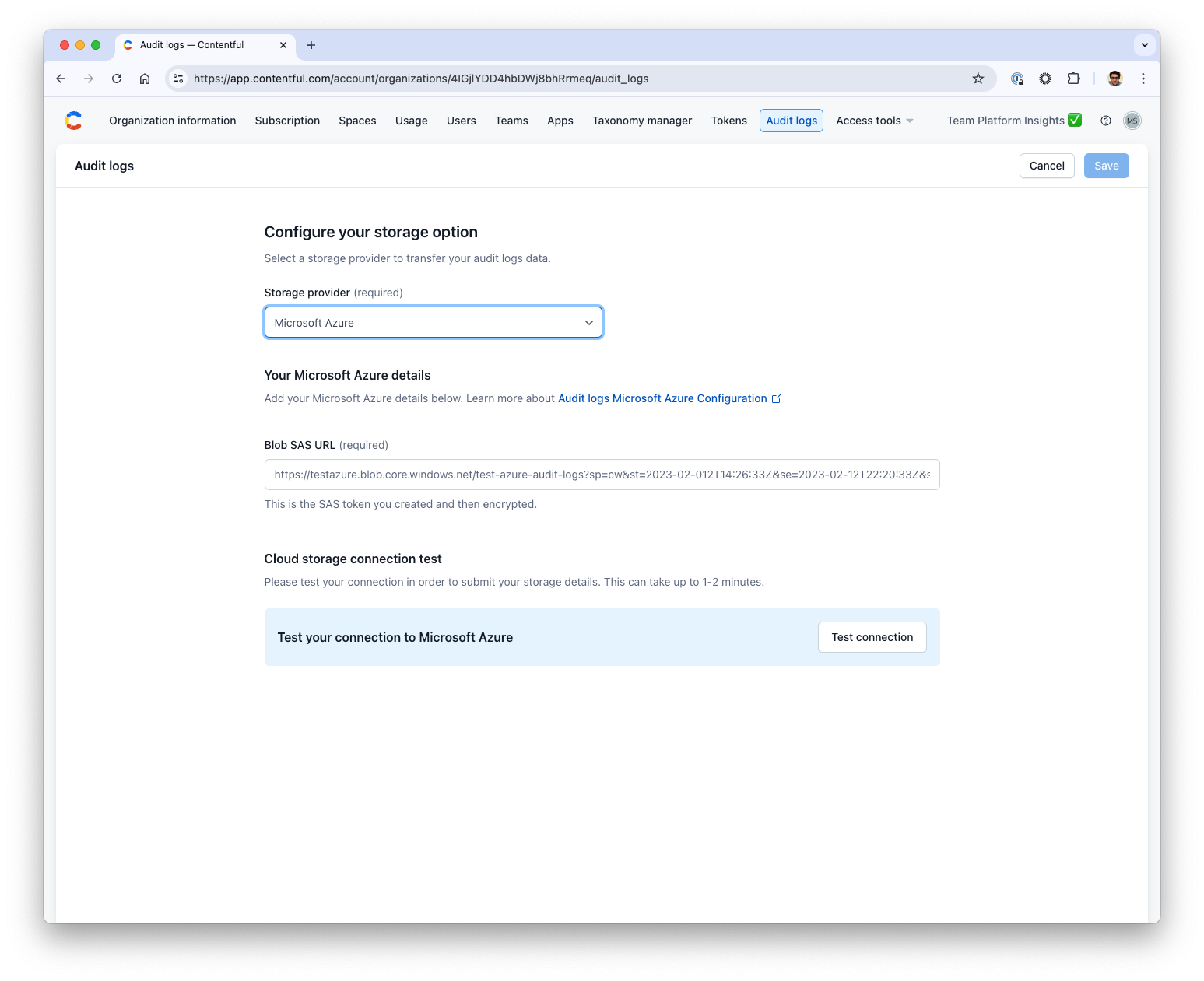
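Before pasting the Blob SAS URL into Contentful, you can sanity-check it locally. Azure encodes the granted permissions in the `sp` query parameter (for example `c` for Create, `w` for Write, `r` for Read) and the expiry in `se`. This Python sketch assumes `se` uses the `YYYY-MM-DDThh:mm:ssZ` form the portal typically generates; the function name is hypothetical, and it does not verify the signature, so only the connection test in Contentful confirms the token works.

```python
from datetime import datetime, timezone
from typing import List, Optional
from urllib.parse import parse_qs, urlsplit


def check_blob_sas_url(sas_url: str, now: Optional[datetime] = None) -> List[str]:
    """Return problems found in a Blob SAS URL; an empty list means the basic checks passed.

    Only the URL's shape, permissions (sp) and expiry (se) are checked;
    the signature itself is not validated.
    """
    problems = []
    parts = urlsplit(sas_url)
    query = parse_qs(parts.query)

    if parts.scheme != "https":
        problems.append("the URL should start with https://")
    if not parts.path.strip("/"):
        problems.append("the URL path should include the container name")

    permissions = query.get("sp", [""])[0]
    if "c" not in permissions or "w" not in permissions:
        problems.append(f"permissions {permissions!r} should include Create (c) and Write (w)")
    if "r" in permissions:
        problems.append("Read (r) is not needed and should be deselected")

    expiry = query.get("se", [""])[0]
    try:
        # Assumes the YYYY-MM-DDThh:mm:ssZ form the portal typically generates.
        expires_at = datetime.strptime(expiry, "%Y-%m-%dT%H:%M:%SZ").replace(tzinfo=timezone.utc)
    except ValueError:
        problems.append(f"could not parse the expiry (se) value {expiry!r}")
    else:
        if expires_at <= (now or datetime.now(timezone.utc)):
            problems.append(f"the SAS token expired at {expiry}")
    return problems
```

Passing `now` explicitly makes the expiry check reproducible, for example when checking whether a token will still be valid on a planned rotation date.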

Step 4: Configure Microsoft Azure storage in the Contentful web application

Note: Only organization owners and organization admins can add and edit the storage details in Contentful.

- In Contentful, navigate to Organization settings -> Audit logs.

- Select Microsoft Azure as a Storage provider option.

- Paste the Blob SAS URL from the previous step into the Blob SAS URL field.

- Click Test the connection to verify that the connection to your Microsoft Azure storage is working correctly. During this test, Contentful sends a file to ensure the configuration is valid. If the test succeeds, you’ll see a confirmation message.

- If the connection test is successful, click Save to finalize the configuration.